Google recently dropped a prompt engineering whitepaper packed with practical techniques for getting better results out of language models.

If you’ve ever felt like your AI responses were a little off, this cheat sheet might be what you need.

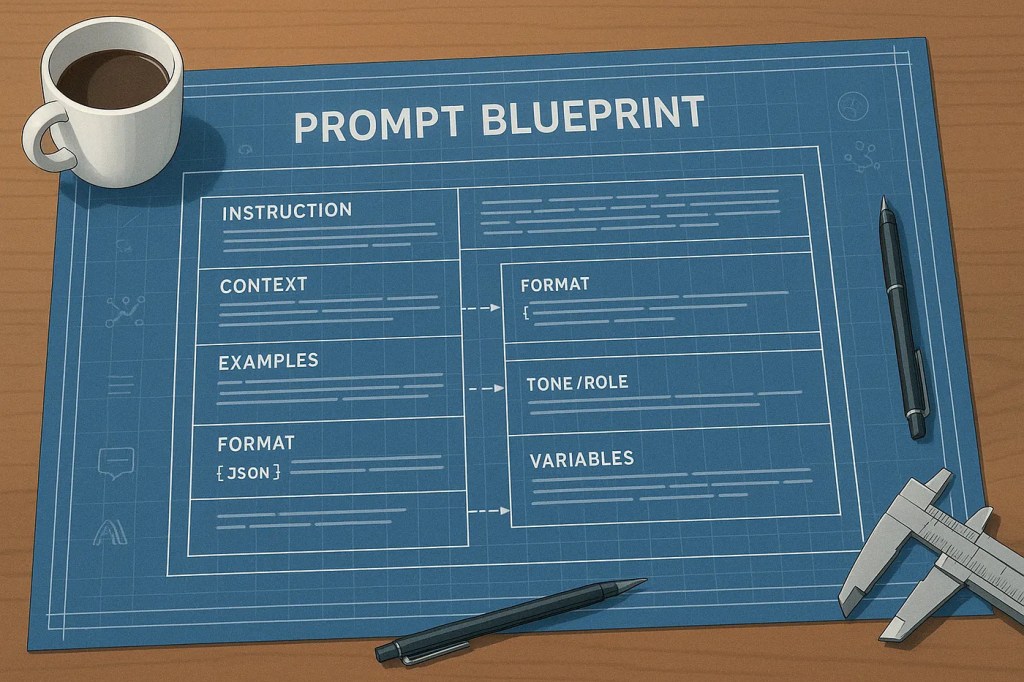

Prompting techniques

Start simple. For straightforward tasks, zero-shot prompting (no examples, just a direct instruction or question) often works well.

Need structure or style? One-shot or few-shot prompting guides your AI by providing clear examples to follow. This gives the model context without overwhelming it.
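The Python below is a sketch of what few-shot prompting looks like in practice. It assembles a prompt from an instruction, a handful of input/output examples, and the new query. The function name and the "Input:/Output:" layout are illustrative assumptions, not a format the whitepaper prescribes.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble a few-shot prompt: instruction, worked examples, then the new input.

    `examples` is a list of (input, output) pairs. The "Input:/Output:" labels
    are an assumed convention; any consistent layout works.
    """
    parts = [instruction.strip(), ""]
    for example_input, example_output in examples:
        parts.append(f"Input: {example_input}")
        parts.append(f"Output: {example_output}")
        parts.append("")
    # End with the query and an open "Output:" slot for the model to complete.
    parts.append(f"Input: {query}")
    parts.append("Output:")
    return "\n".join(parts)


prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as POSITIVE, NEUTRAL or NEGATIVE.",
    [
        ("The battery lasts all day.", "POSITIVE"),
        ("It arrived on time.", "NEUTRAL"),
        ("The screen cracked in a week.", "NEGATIVE"),
    ],
    "Setup was painless and fast.",
)
print(prompt)
```

Passing an empty examples list to the same function produces a zero-shot prompt.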

Want precision? System prompting clearly defines your expectations and output format, like JSON. No guesswork needed.
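Here is a minimal sketch of system prompting for structured output. A system message pins the format to JSON, and the caller validates the reply before using it. The role/content message list mirrors the chat format many model APIs accept, but it is an assumption here. `fake_reply` is a hypothetical stand-in for a real model response.

```python
import json

SYSTEM_PROMPT = (
    "You are a review classifier. Respond with JSON only, using exactly this "
    'shape: {"sentiment": "POSITIVE" | "NEUTRAL" | "NEGATIVE", "confidence": <0..1>}'
)


def build_messages(user_text):
    # Assumed chat-style message list: system instructions first, then the user turn.
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": user_text},
    ]


def parse_reply(reply):
    """Parse the model's reply and check that it matches the requested schema."""
    data = json.loads(reply)  # raises json.JSONDecodeError (a ValueError) on non-JSON output
    if data.get("sentiment") not in {"POSITIVE", "NEUTRAL", "NEGATIVE"}:
        raise ValueError(f"unexpected sentiment: {data.get('sentiment')!r}")
    confidence = float(data.get("confidence", -1))
    if not 0.0 <= confidence <= 1.0:
        raise ValueError(f"confidence out of range: {confidence}")
    return data


messages = build_messages("Setup was painless and fast.")
fake_reply = '{"sentiment": "POSITIVE", "confidence": 0.92}'  # hypothetical model output
print(parse_reply(fake_reply))
```

Validating on the caller's side matters because a format instruction makes JSON likely, not guaranteed.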

Looking to add personality? Role prompting assigns a voice or tone — “Act as a coach,” or “be playful.” It transforms generic outputs into engaging conversations.

Got a complex situation? Contextual prompting gives background and constraints. It steers the AI exactly where you need it to go.

Feeling stuck? Step-back prompting first asks the AI a broader, more general question related to the task, then uses that answer as context for the specific one. This can improve clarity and creativity.
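Step-back prompting can be sketched as two model calls. The first asks a more general question about the principles behind the task. The second feeds that answer back in as context for the specific question. `generate` is a hypothetical callable standing in for whatever model client you use, and the prompt wording is illustrative only.

```python
from typing import Callable


def step_back_answer(question: str, generate: Callable[[str], str]) -> str:
    """Two-stage step-back prompting with a caller-supplied (hypothetical) `generate`."""
    # Stage 1: ask a broader, more abstract question first.
    step_back_prompt = (
        "Before answering, step back: what general principles or background "
        f"knowledge are relevant to this question?\n\nQuestion: {question}"
    )
    principles = generate(step_back_prompt)

    # Stage 2: answer the original question using the principles as context.
    final_prompt = (
        f"Context:\n{principles}\n\n"
        f"Using the context above, answer the question.\nQuestion: {question}"
    )
    return generate(final_prompt)


# Usage with a stub model that records the prompts it receives.
seen_prompts = []


def stub_generate(prompt: str) -> str:
    seen_prompts.append(prompt)
    return "principles..." if len(seen_prompts) == 1 else "final answer"


answer = step_back_answer("Why does ice float on water?", stub_generate)
print(answer)
```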

Facing intricate logic or math? Chain of Thought (CoT) prompts the AI to reason step-by-step, making complex tasks manageable.
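Here is a minimal CoT sketch. It appends an instruction to reason step by step and to finish with a fixed "Answer:" line, then pulls the final answer out with a regular expression. The "Answer:" convention and the sample response are assumptions for illustration. In practice the response would come from the model.

```python
import re


def cot_prompt(question):
    # Ask for visible reasoning, then a machine-readable final line (assumed convention).
    return (
        f"{question}\n\n"
        "Let's think step by step. "
        "End with a final line of the form 'Answer: <value>'."
    )


def extract_answer(response):
    """Return the value after the last 'Answer:' line, or None if there is none."""
    matches = re.findall(r"^Answer:\s*(.+?)\s*$", response, flags=re.MULTILINE)
    return matches[-1] if matches else None


# Hypothetical model response to: "When I was 3, my partner was 3 times my age.
# Now I am 20. How old is my partner?"
sample_response = (
    "When I was 3, my partner was 3 * 3 = 9, so they are 6 years older.\n"
    "Now I am 20, so my partner is 20 + 6 = 26.\n"
    "Answer: 26"
)
print(extract_answer(sample_response))  # → 26
```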

Want accuracy? Use self-consistency: sample several CoT responses (at a higher temperature, so the reasoning varies) and keep the most common final answer. More tries, fewer errors.
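Here is a sketch of self-consistency. It samples several CoT responses, extracts each final answer, and takes a majority vote. `sample_fn` is a hypothetical stand-in for a model call at non-zero temperature. Here it just replays canned responses, one of which reasons its way to a wrong answer.

```python
import re
from collections import Counter
from typing import Callable, Optional


def extract_answer(response: str) -> Optional[str]:
    matches = re.findall(r"^Answer:\s*(.+?)\s*$", response, flags=re.MULTILINE)
    return matches[-1] if matches else None


def self_consistent_answer(prompt: str, sample_fn: Callable[[str], str], n: int = 5):
    """Sample n reasoning paths and return (majority answer, vote counts)."""
    answers = [extract_answer(sample_fn(prompt)) for _ in range(n)]
    votes = Counter(a for a in answers if a is not None)  # ignore unparseable runs
    if not votes:
        return None, votes
    winner, _ = votes.most_common(1)[0]
    return winner, votes


# Hypothetical sampler: replays three canned CoT responses.
canned = iter([
    "3 * 3 = 9, a gap of 6 years; 20 + 6 = 26.\nAnswer: 26",
    "My partner is 3 times my age: 20 * 3 = 60.\nAnswer: 60",
    "The age gap is 9 - 3 = 6, so 20 + 6 = 26.\nAnswer: 26",
])
answer, votes = self_consistent_answer(
    "How old is my partner?", lambda _prompt: next(canned), n=3
)
print(answer, dict(votes))  # → 26 {'26': 2, '60': 1}
```

The vote works on the extracted final answers, not the full reasoning text, because two correct paths rarely phrase their reasoning identically.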

Need diverse reasoning paths? Tree of Thoughts (ToT) explores multiple routes simultaneously, ideal for tough, open-ended problems.
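Tree of Thoughts is more involved, but its core loop can be sketched as a breadth-first beam search over partial "thoughts". At each step, it expands every kept state into candidates, scores them, and keeps only the best few. In a real system, both `propose` and `score` would be model calls. Here they are hypothetical deterministic stand-ins on a toy puzzle (reach 24 from 3 using "+3" and "*2"), so the search structure itself is the point.

```python
from typing import Callable, List, Optional, Tuple

State = Tuple[int, Tuple[str, ...]]  # (current value, steps taken so far)


def tree_of_thoughts(
    start: State,
    propose: Callable[[State], List[State]],
    score: Callable[[State], float],
    is_goal: Callable[[State], bool],
    beam_width: int = 2,
    max_depth: int = 5,
) -> Optional[State]:
    """Breadth-first ToT-style search that keeps the `beam_width` best states per level."""
    frontier = [start]
    for _ in range(max_depth):
        # Expand every kept thought into its candidate next thoughts.
        candidates = [child for state in frontier for child in propose(state)]
        for child in candidates:
            if is_goal(child):
                return child
        if not candidates:
            return None
        # Keep only the most promising partial solutions (the "beam").
        frontier = sorted(candidates, key=score, reverse=True)[:beam_width]
    return None


TARGET = 24


def propose(state: State) -> List[State]:
    # Stand-in for "ask the model for possible next steps".
    value, steps = state
    return [(value + 3, steps + ("+3",)), (value * 2, steps + ("*2",))]


def score(state: State) -> float:
    # Stand-in for "ask the model how promising this path looks".
    value, _ = state
    return -abs(TARGET - value)  # closer to the target scores higher


def is_goal(state: State) -> bool:
    return state[0] == TARGET


result = tree_of_thoughts((3, ()), propose, score, is_goal)
print(result)
```

Unlike a single chain of thought, the search can drop a weak branch (here, paths stuck at 9) and continue from a stronger one.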

Best practices

Always provide examples — this alone can drastically improve results.

Keep prompts simple, clear, and structured. Complexity is your enemy.

Specify your desired output explicitly, format and style included.

Favor clear instructions (“return JSON”) over negative constraints (“don’t return text”).

Control output length — too much detail wastes tokens; too little loses nuance.

Use variables in your prompts. They make a prompt reusable and easier to plug into your applications.
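Using variables can be as simple as a template with named placeholders. The sketch below uses Python's standard `string.Template`. The template text and the variable names `city` and `language` are illustrative.

```python
from string import Template

# One reusable prompt; only the variables change between calls.
TRAVEL_PROMPT = Template(
    "You are a travel guide. Tell me three facts about the city: $city. "
    "Answer in $language."
)


def render(city, language="English"):
    # substitute() raises KeyError if a placeholder is left unfilled,
    # which catches missing variables before the prompt reaches the model.
    return TRAVEL_PROMPT.substitute(city=city, language=language)


print(render("Amsterdam"))
print(render("Kyoto", language="French"))
```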

Test different prompt formats — questions, instructions, statements. Discover what clicks.

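Mix up the example order in few-shot scenarios, especially the classes in classification tasks. Otherwise the model may overfit to the order of the examples rather than learn the task.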
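Here is a sketch of shuffling few-shot examples. It assumes a classification task where the examples were written grouped by label. A seeded `random.Random` keeps each shuffle reproducible, which also helps when you track prompt experiments. The examples and the seed are made up.

```python
import random

# Hypothetical examples, written grouped by label.
examples = [
    ("The battery lasts all day.", "POSITIVE"),
    ("Great value for the price.", "POSITIVE"),
    ("It arrived on time.", "NEUTRAL"),
    ("It does what the box says.", "NEUTRAL"),
    ("The screen cracked in a week.", "NEGATIVE"),
    ("Support never replied.", "NEGATIVE"),
]


def shuffled_examples(examples, seed):
    """Return a shuffled copy so labels are not presented in a fixed, grouped order."""
    rng = random.Random(seed)  # seeded so the same experiment can be reproduced
    shuffled = list(examples)  # copy; leave the original list untouched
    rng.shuffle(shuffled)
    return shuffled


for text, label in shuffled_examples(examples, seed=7):
    print(f"Input: {text}\nOutput: {label}\n")
```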

Always track your prompts. Note changes and learn from experiments.

Adapt quickly to updates. AI evolves, and your prompts should too.

Model settings

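Control your output length with the maximum-token setting. Precise is nice; excess costs. A lower limit only cuts generation off; it doesn’t make the model write more concisely, so ask for brevity in the prompt as well.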

Adjust temperature wisely:

- 0 = straight-laced, perfect for tasks with one right answer
- 0.7 = balanced, creative but still grounded
- 0.9+ = wild, fun, unpredictable
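To see what temperature does, here is the usual formulation. Logits are divided by the temperature before the softmax, so low values sharpen the distribution toward the top token and high values flatten it. Temperature 0 is treated here as greedy decoding (always pick the top token), a common convention. The logits are made-up numbers for three hypothetical candidate tokens.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw logits to probabilities, scaled by temperature."""
    if temperature == 0:
        # Greedy decoding: all probability mass on the highest-scoring token.
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate tokens
for t in (0, 0.2, 0.7, 1.5):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```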

Tweak Top-K and Top-P (nucleus sampling), which narrow the pool of candidate tokens the model samples from, to balance predictability and creativity:

- Low = safe and reliable

- High = diverse and surprising

Tune these to sculpt the vibe of your output.
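Here is a sketch of how the two filters narrow the candidate pool before a token is drawn. Top-K keeps the K most likely tokens. Top-P keeps the smallest set of top tokens whose cumulative probability reaches P. Both then renormalize. The token probabilities below are made up.

```python
def top_k_filter(probs, k):
    """Keep the k most likely tokens and renormalize."""
    kept = dict(sorted(probs.items(), key=lambda kv: kv[1], reverse=True)[:k])
    total = sum(kept.values())
    return {tok: p / total for tok, p in kept.items()}


def top_p_filter(probs, p):
    """Keep the smallest set of top tokens whose cumulative probability reaches p."""
    kept, cumulative = {}, 0.0
    for tok, prob in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept[tok] = prob
        cumulative += prob
        if cumulative >= p:
            break
    total = sum(kept.values())
    return {tok: q / total for tok, q in kept.items()}


# Hypothetical next-token probabilities.
probs = {"the": 0.50, "a": 0.25, "this": 0.15, "every": 0.07, "zebra": 0.03}

print(sorted(top_k_filter(probs, 2)))     # low K: only the safest choices survive
print(sorted(top_p_filter(probs, 0.85)))  # keeps tokens until 85% of the mass is covered
print(sorted(top_p_filter(probs, 0.99)))  # high P lets rarer tokens like "zebra" back in
```

Top-P adapts to the shape of the distribution: when one token dominates, very few survive, while a flat distribution keeps many.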

Here’s a reliable starting point:

- temperature = 0.2
- top_p = 0.95
- top_k = 30

This tends to give coherent results that are creative without going overboard.
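Putting the three settings together, a sampler might apply them in sequence: a temperature-scaled softmax, then the top-K cut, then the top-P cut, then a weighted random draw. This ordering and the function below are an assumed, simplified pipeline. Real inference engines may order or combine the steps differently. The defaults are the starting-point values of temperature 0.2, top-P 0.95 and top-K 30, and the logits are made up.

```python
import math
import random


def sample_next_token(logits, temperature=0.2, top_p=0.95, top_k=30, rng=random):
    """Pick one token from a {token: logit} dict (assumed order: temp, top-K, top-P)."""
    if temperature == 0:
        return max(logits, key=logits.get)  # greedy: no randomness at all
    # 1. Temperature-scaled softmax (subtract the max logit for numerical stability).
    m = max(logits.values())
    weights = {t: math.exp((x - m) / temperature) for t, x in logits.items()}
    total = sum(weights.values())
    ranked = sorted(((w / total, t) for t, w in weights.items()), reverse=True)
    # 2. Top-K: keep only the K most likely tokens.
    ranked = ranked[:top_k]
    # 3. Top-P: keep the smallest prefix whose cumulative probability reaches top_p.
    kept, cumulative = [], 0.0
    for prob, tok in ranked:
        kept.append((prob, tok))
        cumulative += prob
        if cumulative >= top_p:
            break
    # 4. Draw from the surviving tokens, weighted by probability.
    probs, tokens = zip(*kept)
    return rng.choices(tokens, weights=probs, k=1)[0]


logits = {"Paris": 5.0, "Lyon": 2.0, "Marseille": 1.5, "banana": -3.0}  # made-up scores
rng = random.Random(0)
samples = [sample_next_token(logits, rng=rng) for _ in range(5)]
print(samples)
```

At temperature 0.2, almost all of the probability lands on "Paris", so the top-P cut stops after one token and every draw returns it. With a higher temperature, such as 2.0, "Lyon" and "Marseille" start to show up.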

Sometimes, you don’t need a bigger model. Just better prompts.

The whitepaper:

https://drive.google.com/file/d/1AbaBYbEa_EbPelsT40-vj64L-2IwUJHy/view

Feel free to reach out to me if you would like to discuss further. It would be a pleasure (honestly).
